Laguna Tuesday, March 22, 2022

Code optimization: Merging Colliders

Hi dear readers.

It has been a long time since the last post here, but I recently stumbled upon an interesting problem that I want to share with you: code optimization.

The most important rule about optimization: Don't do it prematurely. Take action only if you know that something is too slow.

A software developer always has to balance three speeds which conflict with each other:

- a) the runtime speed of the software

- b) the short term development speed

- c) the long term development speed

Optimizing for a) will often be counterproductive for b) and c). Also, c) in particular can have a major impact on a). And of course, if you are doing very well at b), a) and c) will suffer because you are just crunching features.

The project

Together with some friends I participated in the 14th Alakajam game jam, and we went for a top-down RPG where the player character, Fungus McShroom, has to defeat the evil crystal lord, Pinky. Play "Funky Trip" here or take a look at the source code (disclaimer: mostly crappy game jam code).

Of course we massively overscoped, but in the end (and after some post-jam fixes) we were all quite happy with the result, especially after we added quite some quality-of-life updates and proper dialogs.

The problem

At one particular point in the development, our level artist felt really comfortable with the level editor Tiled and created a huge level. Previously we only had levels that fit on one or two screens; now this monster was around 30x30 screens big. That immediately blew our level loading time into space. The crucial part was the calculation of the physical colliders. Each tile has a blocked property, and if that property is true, the physics needs to be informed that a collider has to be created.

However, we do not want one collider per tile, as we have tens of thousands of tiles. That would be impractical. So the colliders have to be merged before they can be handed to the physics. In our specific case we had around 15,000 small colliders, which ended up as 275 merged colliders. Pretty impressive, if you ask me.

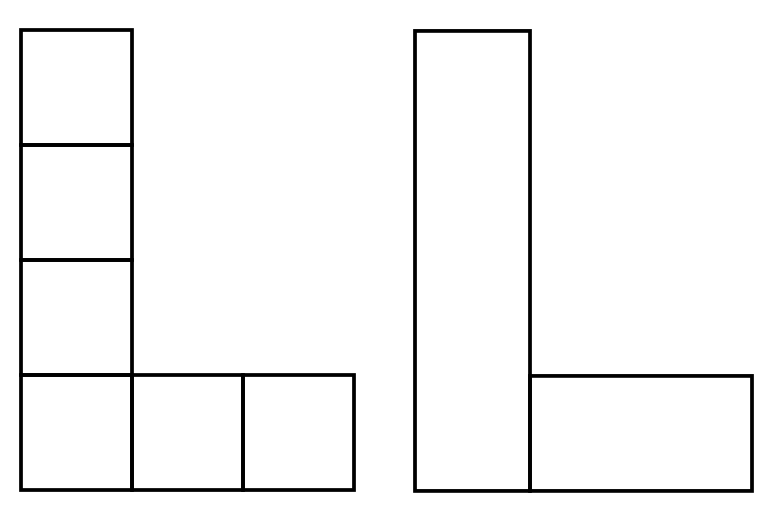

The following picture displays the general idea of merging colliders:

As you can imagine, you need to check every collider against every other collider to see whether they can be merged. Initially this sounds like an O(N^2) problem, which is really bad here because this specific problem can be solved faster.

Initially the code took about 60 seconds to load a level and provide the final set of colliders. In the end this was reduced to around 2 seconds. All times were measured on my trusty (but old) gamedev laptop, without proper statistics applied.

Optimization strategies

1. use a fast algorithm

If the algorithm is crap (O(N^2) in a case where O(N log(N)) would suffice), your code can be as optimized as you like; it will still be slow. I won't go into details here, as this part is very specific and most likely not helpful to a lot of people. If you are interested, find the two versions of the algorithm here (new) and here (old). Of course the post-jam algorithm was also properly developed using TDD.

My first solution was iterative and thus had to walk over the complete list of small colliders multiple times, merging two of them at a time. While this worked great for small levels, it was completely unusable for large levels due to the runtime and memory requirements of the algorithm. A smarter and faster approach is to combine as many colliders as possible in one step, as it avoids traversing a big vector multiple times.
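To give a rough idea of what "combining as many colliders as possible in one step" can look like, here is a minimal sketch. It is not the algorithm from the game's repository; the types `TilePos` and `Rect` and the function `mergeColliders` are made up for illustration, and it assumes that every blocked tile has unit size and appears only once in the input. It sorts the tiles once, collapses each row into horizontal runs, and then stacks runs with identical horizontal extent on top of each other:

```cpp
#include <algorithm>
#include <vector>

// Hypothetical types for illustration; not taken from the game's source.
struct TilePos {
    int x;
    int y;
};

struct Rect {
    int x;
    int y;
    int w;
    int h;
};

// Merges unit-sized blocked tiles into larger rectangles.
// The two sorts cost O(N log N); each merge step is a single linear pass.
// Assumes that no tile position occurs twice in the input.
std::vector<Rect> mergeColliders(std::vector<TilePos> tiles)
{
    // Sort row by row, left to right, so that horizontal neighbours
    // end up next to each other in the vector.
    std::sort(tiles.begin(), tiles.end(), [](TilePos const& a, TilePos const& b) {
        return a.y != b.y ? a.y < b.y : a.x < b.x;
    });

    // Step 1: collapse horizontally adjacent tiles into runs of height 1.
    std::vector<Rect> runs;
    for (TilePos const& t : tiles) {
        if (!runs.empty() && runs.back().y == t.y
            && runs.back().x + runs.back().w == t.x) {
            ++runs.back().w;
        } else {
            runs.push_back(Rect { t.x, t.y, 1, 1 });
        }
    }

    // Sort the runs so that runs with the same horizontal extent are
    // grouped together and ordered from top to bottom.
    std::sort(runs.begin(), runs.end(), [](Rect const& a, Rect const& b) {
        if (a.x != b.x) { return a.x < b.x; }
        if (a.w != b.w) { return a.w < b.w; }
        return a.y < b.y;
    });

    // Step 2: stack runs that sit directly below each other.
    std::vector<Rect> merged;
    for (Rect const& r : runs) {
        if (!merged.empty() && merged.back().x == r.x && merged.back().w == r.w
            && merged.back().y + merged.back().h == r.y) {
            merged.back().h += r.h;
        } else {
            merged.push_back(r);
        }
    }
    return merged;
}
```

For example, a 2x2 block of tiles plus one lone tile somewhere else comes out as two rectangles. This greedy approach does not guarantee the smallest possible number of rectangles, but it never compares every collider with every other one.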

This got the level loading time down from 60 seconds to around 8 seconds (all times on my laptop). This is the biggest speedup here with a factor of 7.5.

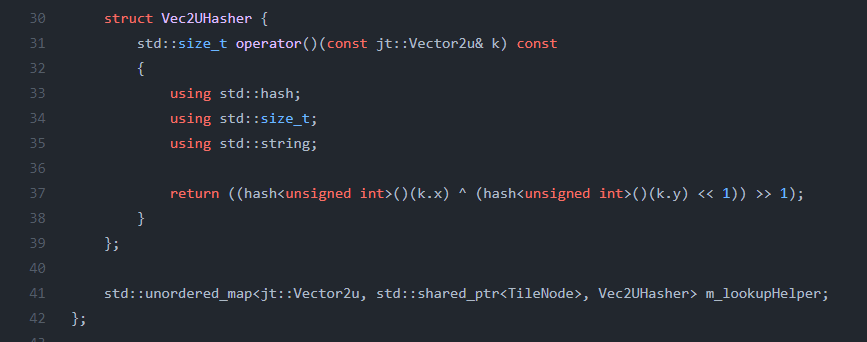

2. the power of hashing

The algorithm was now in place, but the lookup of tiles from the vector still took quite some time. std::unordered_map to the rescue. As tiles need to be looked up regularly (e.g. for merging colliders or later in game for pathfinding), this was a huge improvement. Instead of traversing the complete vector of all tiles every time and checking for the correct position (O(N)), it is way faster to use an unordered_map (basically a hashmap) for the lookup (O(1) on average). The code is pretty simple: all you need to do is provide a custom Hasher for your key, which in this case is just a tile position.
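As a minimal sketch of such a setup (the names `TilePos`, `TilePosHasher`, `Tile`, `TileMap` and `findTile` are assumptions for this example, not the names used in the game's code), the key type needs an equality operator and a hasher that combines both coordinates:

```cpp
#include <cstddef>
#include <functional>
#include <unordered_map>

// Hypothetical key type: an integer tile position.
struct TilePos {
    int x;
    int y;
    bool operator==(TilePos const& other) const
    {
        return x == other.x && y == other.y;
    }
};

// Custom hasher: combines the hashes of both coordinates.
// The mixing step follows the same idea as boost::hash_combine.
struct TilePosHasher {
    std::size_t operator()(TilePos const& p) const noexcept
    {
        std::size_t const hx = std::hash<int> {}(p.x);
        std::size_t const hy = std::hash<int> {}(p.y);
        return hx ^ (hy + 0x9e3779b9u + (hx << 6) + (hx >> 2));
    }
};

// Hypothetical tile payload.
struct Tile {
    bool blocked;
};

using TileMap = std::unordered_map<TilePos, Tile, TilePosHasher>;

// Average O(1) lookup; returns nullptr if there is no tile at that position.
Tile const* findTile(TileMap const& tiles, TilePos pos)
{
    auto const it = tiles.find(pos);
    return it != tiles.end() ? &it->second : nullptr;
}
```

With this in place, `tiles[TilePos { 3, 4 }] = Tile { true };` stores a tile and `findTile(tiles, TilePos { 3, 4 })` finds it again without walking over all tiles.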

Although this was a smaller improvement for level loading than the first step, it also made a big difference in runtime performance. On my machine the loading time decreased from 8 seconds to around 5 seconds. Not as big as the first one, but still a factor of around 1.6.

3. copies count

When doing optimization, it is important to know where and what to optimize. A profiler can help you with that. For Windows I really enjoyed working with Very Sleepy, but of course there are other tools available, e.g. Google's Orbit (Windows and Linux) or Cachegrind (Linux only and a bit harder to use because it has no GUI).

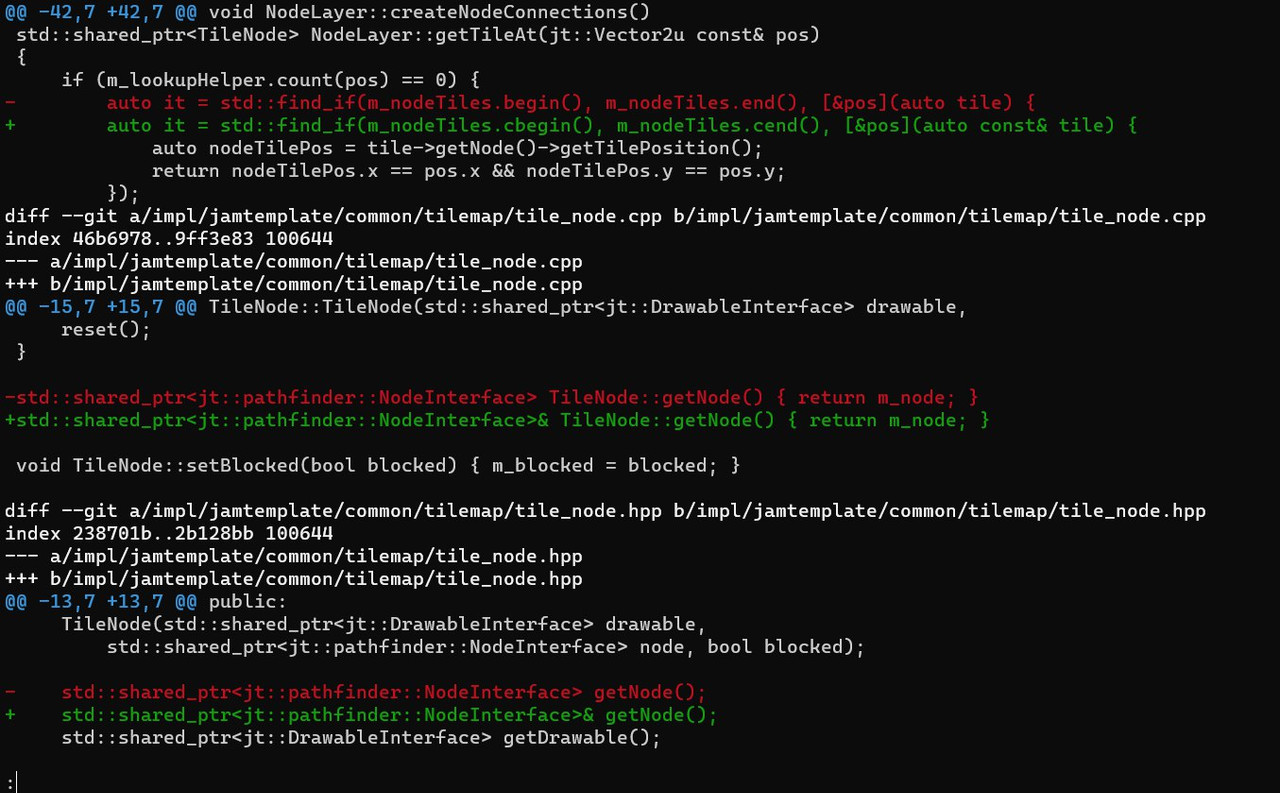

This performance analysis showed that a lot of time was spent in incref and decref functions. These belong to shared pointers: the reference counter is increased every time a copy of a shared pointer is created and decreased when that copy is destroyed.

Once that issue was found, the simple solution was to remove unnecessary copies of shared_ptr in inner loops and for temporary objects.

The change set is rather simple
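The actual change from the repository is not reproduced here. As a hypothetical illustration of the pattern (the `Collider` struct and both functions are invented for this example), the difference between copying a shared_ptr in a loop and taking it by const reference looks roughly like this:

```cpp
#include <memory>
#include <vector>

// Hypothetical collider type for illustration.
struct Collider {
    int width;
    int height;
};

// Before: "auto collider" copies the shared_ptr in every iteration, so the
// reference counter is increased and decreased once per element.
long totalAreaWithCopies(std::vector<std::shared_ptr<Collider>> const& colliders)
{
    long area = 0;
    for (auto collider : colliders) {
        area += collider->width * collider->height;
    }
    return area;
}

// After: "auto const&" only refers to the existing shared_ptr, so the
// reference counter is not touched at all.
long totalAreaWithoutCopies(std::vector<std::shared_ptr<Collider>> const& colliders)
{
    long area = 0;
    for (auto const& collider : colliders) {
        area += collider->width * collider->height;
    }
    return area;
}
```

Both functions return the same result; only the amount of reference counting differs. The same applies to function parameters: passing a `std::shared_ptr` by value copies it, passing it by const reference does not.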

But remember, this is in an inner loop which gets called hundreds of thousands of times, so every small bit counts. The results speak for themselves: from 5 seconds to 2 seconds on my machine is a speedup factor of around 2.5. For such a small change this is pretty remarkable.

Of course I am looking forward to feedback and comments.